For more than three months, my co‑author Angelika Steinacker and I have been deep in the weeds researching, brainstorming, threat‑modeling, and refining what a secure identity and access architecture should look like in the era of agentic AI. Today, I’m excited to share that our technical paper, Governing AI Agents – An Agent-Aware IAM Framework, is now publicly available.

👉 Read it on ResearchGate: https://www.researchgate.net/publication/400396082_Governing_AI_Agents_An_Agent-Aware_IAM_Framework

Why we wrote this

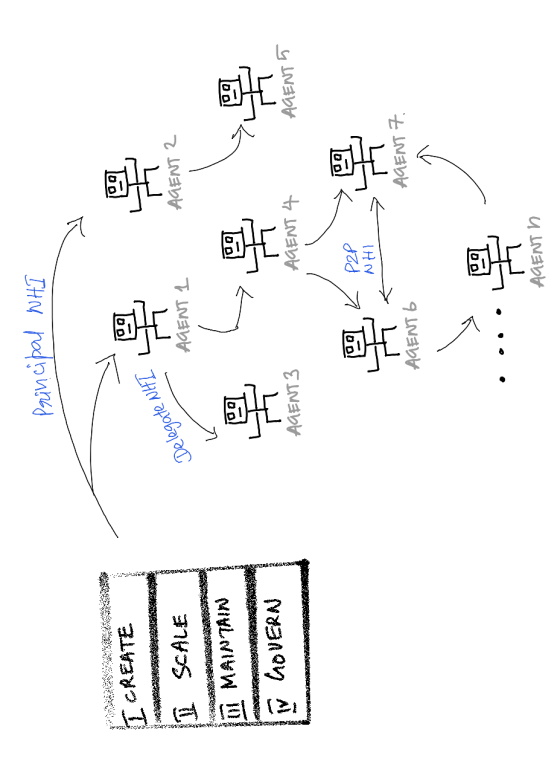

Agentic AI systems introduce Autonomous Non‑Human Identities (A‑NHIs)—entities that operate with autonomy, make decisions at machine speed, and collaborate across applications, APIs, and other agents. These behaviors fall far outside what traditional IAM was designed to handle.

Across our research, we observed consistent gaps in current IAM systems:

- Reliance on static credentials

- Lack of fine‑grained, purpose‑aligned authorization

- Limited visibility into multi‑hop agent delegation chains

- No robust way to establish dynamic cross‑domain trust

- Insufficient mechanisms for end‑to‑end provenance

What this paper contributes

We propose an Agent‑Aware IAM model that extends and fully implements the Identity Fabric. The result is a four‑layer deployment architecture designed specifically for agentic environments:

- Identity Foundation: verifiable agent identities, ephemeral issuance, ownership, and purpose metadata

- Trust & Federation: dynamic cross‑domain trust using Verifiable Credentials (VCs), Decentralized Identifiers (DIDs), token exchange, and trust brokers

- Security & Privacy Enforcement: intent‑aligned authorization, just-in-time (JIT) access, privacy safeguards, and drift detection

- Lifecycle & Observability: full provenance across agent → token → task → data → decision
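
To make the first and third layers a little more concrete, here is a minimal Python sketch of ephemeral issuance with ownership and purpose metadata, followed by a purpose-aligned authorization check. It is an illustration only, not the design from the paper: the `AgentCredential` fields, the `POLICY` table, the agent and team names, and the five-minute lifetime are all assumptions made for this example.

```python
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AgentCredential:
    """Hypothetical short-lived agent credential (field names are illustrative)."""
    agent_id: str
    owner: str            # accountable human or team
    purpose: str          # declared purpose the credential was issued for
    token: str            # opaque random secret standing in for a signed token
    expires_at: datetime


# Hypothetical policy: each purpose maps to the (action, resource) pairs it allows.
POLICY = {
    "credit-scoring": {("read", "credit-report")},
    "order-management": {("read", "order"), ("write", "order")},
}


def issue_credential(agent_id, owner, purpose, ttl_seconds=300, now=None):
    """Issue an ephemeral credential that expires ttl_seconds after `now`."""
    if now is None:
        now = datetime.now(timezone.utc)
    return AgentCredential(
        agent_id=agent_id,
        owner=owner,
        purpose=purpose,
        token=secrets.token_urlsafe(32),
        expires_at=now + timedelta(seconds=ttl_seconds),
    )


def is_authorized(cred, action, resource, now=None):
    """Allow a request only if the credential is unexpired and the
    (action, resource) pair is permitted for the credential's purpose."""
    if now is None:
        now = datetime.now(timezone.utc)
    if now >= cred.expires_at:
        return False
    return (action, resource) in POLICY.get(cred.purpose, set())


if __name__ == "__main__":
    cred = issue_credential("credit-scorer-01", "risk-team", "credit-scoring")
    print(is_authorized(cred, "read", "credit-report"))
    print(is_authorized(cred, "write", "order"))
```

A real deployment would replace the random token with a signed, verifiable credential and the in-memory table with a policy engine. The point of the sketch is only that access is tied to a declared purpose and expires on its own.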

We illustrate these layers through a multi‑agent system that combines credit scoring and order management, showing how secure, audited flows can be constructed end‑to‑end.
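
As a rough illustration of the agent → token → task → data → decision provenance chain, the Python sketch below links one record per stage with SHA-256 hashes so that later tampering is detectable. Hash-linking is an assumption chosen for this example, not necessarily the mechanism used in the paper, and the record fields and sample values are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass

# Stage order taken from the provenance chain: agent → token → task → data → decision.
STAGES = ("agent", "token", "task", "data", "decision")


@dataclass(frozen=True)
class ProvenanceRecord:
    stage: str
    detail: str
    prev_hash: str    # hash of the previous record ("" for the first one)
    record_hash: str  # hash over this record's stage, detail, and prev_hash


def _digest(stage, detail, prev_hash):
    """Deterministic SHA-256 digest over one record's contents."""
    payload = json.dumps(
        {"stage": stage, "detail": detail, "prev": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(details):
    """Build a hash-linked chain from a dict mapping each stage to a description."""
    chain = []
    prev = ""
    for stage in STAGES:
        record_hash = _digest(stage, details[stage], prev)
        chain.append(ProvenanceRecord(stage, details[stage], prev, record_hash))
        prev = record_hash
    return chain


def verify_chain(chain):
    """Return True only if every stage is present in order and all hashes match."""
    if tuple(r.stage for r in chain) != STAGES:
        return False
    prev = ""
    for r in chain:
        if r.prev_hash != prev or r.record_hash != _digest(r.stage, r.detail, prev):
            return False
        prev = r.record_hash
    return True


if __name__ == "__main__":
    # Hypothetical values for a single credit-scoring step.
    chain = build_chain({
        "agent": "credit-scorer-01",
        "token": "ephemeral-token-123",
        "task": "score-application-42",
        "data": "credit-report:applicant-42",
        "decision": "approve",
    })
    print(verify_chain(chain))
```

Because each record's hash covers the previous record's hash, changing any earlier stage invalidates everything after it, which is what lets an auditor trace a decision back to the agent that produced it.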

A collaboration worth highlighting

This work came from months of intense technical deep‑dives, design sessions, and constant iteration. Collaborating with my co‑author Angelika Steinacker made the work intellectually exciting and extremely rewarding. Our discussions ranged from identity proofs and decentralized trust to model attestation, SBOM linkage, and federated governance.

Looking ahead

As enterprises move toward multi‑agent ecosystems, we believe trust—not raw capability—will define what can scale safely. Identity, policy, and provenance must become the control plane for autonomous digital workflows.

As I mentioned in my previous blog post, Rethinking Identity in the Age of Multi-Agent Systems, this is a very important field of study within agentic AI systems. As security architects, we have more work to do to ensure these agentic systems operate within the boundaries we set for them.

Thank you to everyone who encouraged this work along the way.

I hope this paper serves as a useful reference for enterprise security architects, CISOs, IAM teams, and AI governance practitioners navigating this emerging space.