It would be an understatement to say that the outcome of the recently concluded US election has been a shocker for many people in the US and across the world. As various media outlets have called out, the results have also exposed the failure of the political polling and predictive analytics industries, and have called into question the power of data and data science.

a) Predictive Models were wrong

- There is a widely accepted adage in the statistics world that “All models are wrong”. The reasoning behind it is that ‘data’ beats ‘algorithms’, and that models are only as good as the data used to validate them. But in this particular case, the models have been in use for polling predictions for decades, and it's not clear to me what went wrong with them.

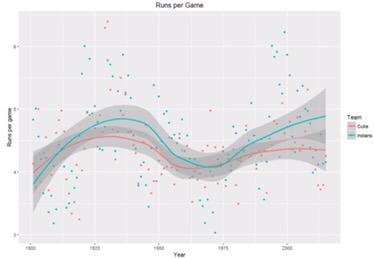

- Having said that, there is definitely some interesting work published in the last few weeks that shows how Inference and Regression models can be used to understand the outcome of this election. Here is a whitepaper published by professors in the Dept. of Statistics at Oxford University. To summarize the paper:

We combine fine-grained spatially referenced census data with the vote outcomes from the 2016 US presidential election. Using this dataset, we perform ecological inference using distribution regression (Flaxman et al, KDD 2015) with a multinomial-logit regression so as to model the vote outcome Trump, Clinton, Other / Didn’t vote as a function of demographic and socioeconomic features. Ecological inference allows us to estimate “exit poll” style results like what was Trump’s support among white women, but for entirely novel categories. We also perform exploratory data analysis to understand which census variables are predictive of voting for Trump, voting for Clinton, or not voting for either. All of our methods are implemented in python and R and are available online for replication.
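To make the "multinomial-logit" part of that summary a little more concrete, here is a minimal sketch of how such a model turns a set of features into probabilities for the three outcomes. This is not the paper's distribution regression and not their code; the feature values, weights and intercepts below are made-up numbers, and `vote_probabilities` is a hypothetical helper name used only for illustration.

```python
import math

# The three outcome categories named in the abstract.
OUTCOMES = ["Trump", "Clinton", "Other / Didn't vote"]


def vote_probabilities(features, weights, intercepts):
    """Multinomial-logit link: one linear score per outcome, then softmax."""
    scores = [
        b + sum(w * x for w, x in zip(ws, features))
        for ws, b in zip(weights, intercepts)
    ]
    top = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up numbers, for illustration only: two standardized features
# (say, hypothetical demographic and socioeconomic indicators for a region).
features = [0.5, -1.0]
weights = [
    [0.8, -0.3],   # assumed weights for "Trump"
    [-0.6, 0.4],   # assumed weights for "Clinton"
    [0.0, 0.0],    # reference category
]
intercepts = [0.1, 0.2, 0.0]

probs = vote_probabilities(features, weights, intercepts)
for name, p in zip(OUTCOMES, probs):
    print(f"{name}: {p:.3f}")
```

The point of the sketch is only that the model's output is a set of probabilities that add up to 1, not a single yes/no call. In a real analysis the weights would be estimated from the census and vote data rather than typed in by hand.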

b) Data used in the models was bad

- Not everyone will be open about their opinion, especially if it is not aligned with the general consensus among the public. Such opinions are usually not welcome in our society. A recent example of this is Mark Zuckerberg reprimanding employees for writing “All Lives Matter” on a Black Lives Matter posting inside the Facebook headquarters. So there is a good chance such opinions never made it into the datasets used by the models.

- Groupthink also played a major role in skewing the dataset. When most of the media and news agencies were predicting Hillary’s landslide victory over Trump, only a courageous pollster would contradict the widely supported poll predictions. This resulted in everybody misreading the data.

- The analysis methods were incomplete. They relied only on traditional ways of collecting data, like surveys and polls, instead of also using important signals from social media platforms, especially Twitter. The candidates' social media engagement with voters was clearly an ignored data set, in spite of social media analysts sounding the alarm that the polls were not reflecting the actual situation on the ground before the election. Clinton outspent Trump on TV ads, set up more field offices, and sent staff to swing states earlier. But Trump simply leveraged social media better to both reach and grow his audience, and he clearly benefitted from that old adage, “any press is good press.”

To summarize…

Data science has limitations

- Data is powerful only when used correctly. As I called out above, biased data was the biggest spoilsport in the predictions for this election.

- Variety of data is more important than volume. There is a constant rage these days to collect as much data as possible from various sources. Google and Facebook are leading examples. As I called out above, drawing on different data sets, including social media, could definitely have helped bring the predictive models closer to reality. Simply put, the key is using the right “big data”.

Should we be surprised?

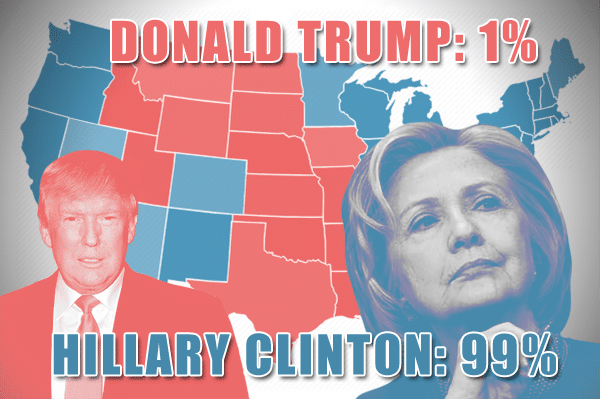

- To twist the perspective a little bit, if we look at this keeping in mind how probabilistic predictions work, the outcome wouldn’t be a surprise to us. For example, if I said that “I am 99% sure that it's going to be a sunny day tomorrow”, and you offered to bet on it at odds of 99 to 1, I might say that “I didn’t mean it literally; I just meant it will ‘probably’ be a sunny day”. Would you be surprised if I tossed a coin twice and got heads both times? Not at all, right? That happens one time in four.

- This New York Times article captures very well the gist of what actually went wrong with the use of data and probabilities in this election. I think the following lines say it all:

The danger, data experts say, lies in trusting the data analysis too much without grasping its limitations and the potentially flawed assumptions of the people who build predictive models. The technology can be, and is, enormously useful. “But the key thing to understand is that data science is a tool that is not necessarily going to give you answers, but probabilities,” said Erik Brynjolfsson, a professor at the Sloan School of Management at the Massachusetts Institute of Technology.
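The coin-toss and betting examples above can be checked with a few lines of Python. This is just a rough sketch of the arithmetic, not anything taken from the article or from a polling model; the function names are my own, and the simulation assumes a fair coin.

```python
import random


def prob_all_heads(tosses, p_heads=0.5):
    """Exact probability that every one of `tosses` independent tosses is heads."""
    return p_heads ** tosses


def simulate_all_heads(tosses, trials=100_000, seed=0):
    """Estimate the same probability by simulating a fair coin."""
    rng = random.Random(seed)
    hits = sum(
        all(rng.random() < 0.5 for _ in range(tosses))
        for _ in range(trials)
    )
    return hits / trials


def implied_probability(odds_for, odds_against):
    """Probability implied by betting odds of `odds_for` to `odds_against`."""
    return odds_for / (odds_for + odds_against)


print(prob_all_heads(2))           # 0.25
print(simulate_all_heads(2))       # close to 0.25; the exact value depends on the seed
print(implied_probability(99, 1))  # 0.99
```

Two heads in a row comes up about a quarter of the time, so nobody calls it a shock. In the same way, an outcome that a forecast gave a modest but non-zero probability is not, by itself, proof that the forecast was broken.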

Title image courtesy: http://www.probabilisticworld.com